What is Lexical Analysis?

Lexical Analysis is the first phase of a compiler where raw source code is scanned and broken into meaningful units called tokens. It reads the program character by character, grouping them into lexemes (words) and assigning them token classifications. Think of it like breaking a paragraph into words and punctuation before analyzing its meaning.

✅ Key Role: Converts messy human-written code into structured components for easier processing by later compiler stages.

🔎 Example:

Consider the source code:

int age = 25;After lexical analysis, it becomes:

int→ Keywordage→ Identifier=→ Operator25→ Literal (Number);→ Delimiter (End of Statement)

Why is Lexical Analysis Needed?

Lexical analysis is necessary because computers cannot understand raw programming languages the way humans do.

Without Lexical Analysis, the compiler would struggle with:

- Ignoring unnecessary elements like spaces, tabs, and comments.

- Efficiently recognizing words (tokens) instead of scanning character by character every time.

- Detecting errors early in the compilation process.

🔎 Analogy:

Imagine a grocery list written with extra spaces, comments, and unnecessary characters:

# Buy these items

Milk 1ltr // Get fresh milk

Eggs 12 // Buy eggs with extra space

Lexical analysis cleans this up to just:

Milk1ltrEggs12

This helps in further processing the data efficiently.

Components of Lexical Analysis

Lexical analysis has three core components:

1️⃣ Lexeme: A sequence of characters that matches a pattern and forms a meaningful unit.

- Example:

int,x,42

2️⃣ Token: A classification assigned to a lexeme (like a label).

- Example:

int(Keyword),x(Identifier),42(Number)

3️⃣ Pattern: A rule or regular expression that defines how lexemes are recognized.

- Example:

[a-zA-Z_][a-zA-Z0-9_]*(Pattern for valid variable names)

🔎 Example Breakdown:

For this code:

float price = 99.99;| Lexeme | Token Type | Pattern |

|---|---|---|

| float | Keyword | int |

| price | Identifier | [a-zA-Z_][a-zA-Z0-9_]* |

| = | Operator | = |

| 99.99 | Number | [0-9]+(\.[0-9]+)? |

| ; | Delimiter | ; |

Steps in Lexical Analysis

Lexical analysis works as an automated scanner that processes source code step-by-step.

📌 Step 1: Read Characters from Input

- The lexer reads the code character by character.

📌 Step 2: Recognize Lexemes and Match Patterns

- Groups characters into lexemes (words) based on pattern rules.

📌 Step 3: Convert Lexemes into Tokens

- Assigns a token (label) to each lexeme for easy classification.

📌 Step 4: Ignore Whitespaces and Comments

- Skips spaces, tabs, and comments since they are not needed for execution.

📌 Step 5: Handle Errors

- If a lexeme doesn’t match any pattern, an error is reported.

🔎 Example Walkthrough (Processing int x = 10;)

| Step | Character Read | Recognized Lexeme | Token Output |

|---|---|---|---|

| 1 | i |

int |

Keyword |

| 2 | x |

x |

Identifier |

| 3 | = |

= |

Operator |

| 4 | 1 |

10 |

Number |

| 5 | ; |

; |

Delimiter |

Handling Whitespaces, Newlines, and Comments

Lexical analyzers automatically remove unnecessary characters before further processing.

✅ Whitespaces: Skipped unless inside string literals ("Hello World").

✅ Newlines: Ignored unless used for formatting (Python indentation).

✅ All Comments: Entirely removed before tokenization.

🔎 Example (Before & After Lexical Analysis)

Source Code (Before Processing)

// This is a comment

int a = 5; // Variable declaration

float b = 3.14;After Lexical Analysis (Cleaned Up)

int a = 5;

float b = 3.14;Example: Implementing a Simple Lexical Analyzer in Python

This Python script simulates a basic lexer using regular expressions.

import re

# Define token patterns

token_patterns = [

(r'int|float|char', 'KEYWORD'),

(r'[a-zA-Z_][a-zA-Z0-9_]*', 'IDENTIFIER'),

(r'[0-9]+(\.[0-9]+)?', 'NUMBER'),

(r'=', 'OPERATOR'),

(r';', 'DELIMITER'),

(r'\s+', None) # Ignore whitespaces

]

def lexical_analyzer(code):

tokens = []

while code:

matched = False

for pattern, token_type in token_patterns:

regex = re.compile(pattern)

match = regex.match(code)

if match:

lexeme = match.group(0)

if token_type: # Ignore whitespaces

tokens.append((lexeme, token_type))

code = code[len(lexeme):] # Move to next lexeme

matched = True

break

if not matched:

raise ValueError(f"Unexpected character: {code[0]}")

return tokens

# Test lexer

code_sample = "int age = 25;"

tokens = lexical_analyzer(code_sample)

for lexeme, token in tokens:

print(f"{lexeme} -> {token}")✅ Output:

int -> KEYWORD

age -> IDENTIFIER

= -> OPERATOR

25 -> NUMBER

; -> DELIMITERTokenization

Tokenization is the process of breaking down a source program into a sequence of meaningful tokens. Each token is a fundamental unit of the language, representing a logical component like a keyword, identifier, or operator.

Token Structure

Each token consists of:

- Token Name: A unique identifier for the token category (e.g., "IDENTIFIER", "NUMBER", "KEYWORD").

- Lexeme: The actual sequence of characters in the source code (e.g.,

int,varName,123). - Attribute: Additional information about the token (e.g., pointer to a symbol table entry).

Types of Tokens

Tokens are classified into different categories:

Keywords

Keywords are reserved words in a programming language that have special meanings and cannot be used as identifiers.

- Examples in C:

if,while,return,for,break. - Examples in Python:

def,class,lambda,yield. - Examples in JavaScript:

function,var,let,const.

Identifiers

Identifiers are user-defined names for variables, functions, and objects.

- They must follow naming rules (e.g., in C, identifiers cannot start with a digit).

- Examples:

totalSum,myFunction,studentAge.

Literals

Literals are fixed values assigned to variables, such as numbers, characters, and strings.

- Integer literals:

42,-5,1000. - Floating-point literals:

3.14,0.001,-2.718. - Character literals:

'A','z','5'. - String literals:

"hello","CompilerDesign".

Operators

Operators perform computations and comparisons. They are categorized as:

- Arithmetic operators:

+,-,*,/,%. - Comparison operators:

==,!=,<,>,<=,>=. - Logical operators:

&&(AND),||(OR),!(NOT). - Bitwise operators:

&,|,^,~,<<,>>.

Special Symbols

Special symbols are punctuation marks or delimiters that structure the code.

- Separators:

;(end of statement),,(comma separator). - Brackets:

{}(block grouping),[](arrays),()(function calls).

Tokenization Process

The lexical analyzer (scanner) reads the source program character by character and groups them into tokens using:

- Pattern Matching: Uses regular expressions to define token rules.

- Finite Automata: Determines valid sequences of characters.

- Buffering Techniques: Improves efficiency by reading input in chunks.

Example Tokenization

For the input code:

int sum = 42 + 8;The lexer produces tokens:

| Lexeme | Token Name | Attribute |

|---|---|---|

| int | KEYWORD | int |

| sum | IDENTIFIER | Pointer to symbol table |

| = | OPERATOR | = |

| 42 | LITERAL | Integer |

| + | OPERATOR | + |

| 8 | LITERAL | Integer |

| ; | SPECIAL_SYMBOL | Statement Terminator |

Challenges in Tokenization

- Lookahead Issues: Differentiating between

=and==requires a two-character lookahead. - Whitespace Handling: Spaces are ignored except inside string literals.

- Handling Escape Sequences: Recognizing

\n,\tin strings. - Multi-line Tokens: Managing multi-line comments and string literals.

Regular Expressions & Finite Automata

Lexical analyzers use Regular Expressions (RE) and Finite Automata (FA) to define and recognize token patterns in programming languages. These mathematical models efficiently describe how characters are grouped into meaningful tokens, ensuring correct identification of keywords, literals, identifiers, and operators.

Regular Expressions (RE)

A Regular Expression (RE) is a sequence of characters that defines a search pattern. It is a formal way to describe patterns in strings and is widely used in lexical analysis.

Components of Regular Expressions

- Alphabet (Σ): A finite set of symbols. For example, in programming, Σ can be the ASCII character set.

- Concatenation: Joining two patterns sequentially. Example:

abmatches "ab". - Union (Alternation): Represents multiple choices. Example:

a|bmatches "a" or "b". - Kleene Star (*): Represents zero or more occurrences. Example:

a*matches "", "a", "aa", "aaa", etc. - Plus Operator (+): One or more occurrences. Example:

a+matches "a", "aa", "aaa", etc., but not "". - Optional Operator (?): Zero or one occurrence. Example:

a?matches "" or "a". - Character Classes: Defines a set of characters. Example:

[0-9]matches any digit.

Example Regular Expressions

| Pattern | Description | Example Matches |

|---|---|---|

[a-zA-Z_][a-zA-Z0-9_]* |

Valid Identifiers | var_name, _temp, count1 |

[0-9]+ |

Integer Numbers | 42, 123, 9999 |

[0-9]*\.[0-9]+ |

Floating-point Numbers | 3.14, 0.5, .75 |

"([^"\\]|\\.)*" |

String Literals | "hello", "C Compiler" |

(if|while|for|return) |

Keywords | if, while, return |

\+\+|\-|\*|\/ |

Operators | ++, -, *, / |

Finite Automata (FA)

Finite Automata are abstract machines used to implement lexical analyzers that recognize patterns defined by regular expressions. There are two main types:

Deterministic Finite Automata (DFA)

A Deterministic Finite Automaton (DFA) is a state machine where each state has exactly one transition per input symbol.

- Advantages: Fast execution, as there is no need for backtracking.

- Disadvantages: Requires more memory due to larger state tables.

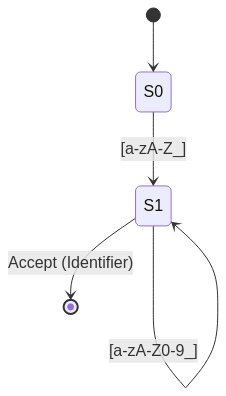

- Example DFA for Identifiers:

stateDiagram

[*] --> S0

S0 --> S1 : [a-zA-Z_]

S1 --> S1 : [a-zA-Z0-9_]

S1 --> [*] : Accept (Identifier)

Explanation:

- S0: Start state.

- S1: Moves to an identifier state if it starts with a letter or underscore.

- S1 (looping): Accepts alphanumeric characters and underscores.

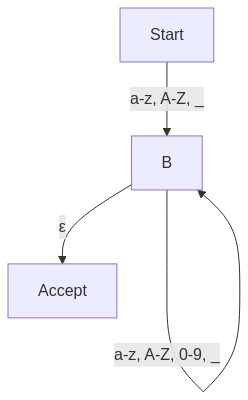

Nondeterministic Finite Automata (NFA)

A Nondeterministic Finite Automaton (NFA) allows multiple transitions for the same input, meaning multiple states can be active at once.

- Advantages: More compact representation.

- Disadvantages: Requires backtracking or additional computation.

- Example NFA for Identifiers:

graph TD

A[Start] -->|a-z, A-Z, _| B

B -->|a-z, A-Z, 0-9, _| B

B -->|ε| Accept

Converting NFA to DFA

An NFA can be converted into an equivalent DFA using the Subset Construction Algorithm. This process removes ambiguity by constructing deterministic states from multiple NFA states.

- Step 1: Identify ε-closures (states reachable without consuming input).

- Step 2: Construct DFA states from NFA states.

- Step 3: Define transitions uniquely for each input.

NFA vs. DFA: Comparison

| Feature | NFA | DFA |

|---|---|---|

| Number of transitions per state | Multiple allowed | One per input |

| Backtracking | Possible | Not required |

| Speed | Slower | Faster |

| Memory Usage | Lower | Higher |

| Conversion Complexity | Simple to construct | Requires subset construction |

Using FA in Lexical Analysis

- Each token type (keywords, identifiers, literals) is defined using a regular expression.

- The RE is converted into an NFA, which allows multiple paths.

- The NFA is then converted into a DFA to ensure efficient, backtrack-free scanning.

- The DFA is implemented in a scanner generator (e.g., Lex, Flex) or manually coded in the compiler.

Industry Applications

- Compilers: Tokenization in GCC, Clang, Java Compiler (Javac).

- Text Editors: Syntax highlighting in VS Code, Sublime Text.

- Search Engines: Pattern matching in Google Search.

- Network Security: Intrusion detection systems using regex filtering.

Lexical Errors

Lexical errors occur when an input sequence of characters does not match any valid token pattern defined by the programming language's lexical rules. These errors are detected at the earliest stage of compilation (lexical analysis) and can prevent further processing.

Importance of Detecting Lexical Errors

- Ensures syntax correctness before parsing begins.

- Prevents ambiguities in token recognition.

- Helps in debugging and error reporting.

- Essential for code editors and IDEs to provide real-time feedback.

Causes of Lexical Errors

Lexical errors arise due to:

- Use of illegal characters that do not belong to the language's character set.

- Malformed identifiers that violate naming conventions.

- Incorrect literals such as unclosed strings or malformed numbers.

- Unexpected tokens that break tokenization rules.

Common Types of Lexical Errors

Illegal Characters

Characters that are not part of the programming language's character set result in lexical errors.

Examples

- Example in C: The

#symbol is reserved for preprocessor directives but cannot appear in regular expressions.

int a = 10 # 5; // Error: Unexpected #

@ symbol is only allowed for annotations, not as an identifier character.

int @var = 5; // Error: Illegal character '@'

$ symbol is not valid in variable names.

$amount = 100 # Error: Unexpected '$' in identifier

Invalid Identifiers

Identifiers must follow specific naming conventions:

- Cannot start with a digit (e.g.,

1varis invalid in most languages). - Must not contain special characters (e.g.,

my-variableis invalid in C but valid in JavaScript). - Cannot use reserved keywords as variable names.

Examples

// Incorrect

int 1value = 10; // Error: Identifier cannot start with a digit

// Incorrect

int if = 5; // Error: 'if' is a reserved keyword

Unclosed String Literals

String literals must start and end with the correct delimiter, such as " or '. If the closing delimiter is missing, a lexical error occurs.

Examples

// Incorrect: Missing closing quote

printf("Hello world); // Error: Unterminated string literal

// Incorrect: Unmatched single quote

print('Welcome) // Error: Unclosed string literal

Invalid Numeric Literals

Numbers must follow proper formatting rules.

Examples

- Example (Incorrect Integer): Leading zeros can be interpreted as octal in some languages.

int num = 05; // Warning: Leading zero indicates an octal number

float x = 3..14; // Error: Unexpected '.'

Invalid Escape Sequences

Escape sequences are used inside string literals to represent special characters, but an incorrect escape sequence can cause a lexical error.

Examples

// Incorrect: \q is not a valid escape sequence

System.out.println("Hello \q World"); // Error

// Incorrect: \U starts a Unicode sequence but is incomplete

print("C:\Users\John") // Error: Incomplete Unicode escape sequence

Unterminated Comments

Multi-line comments must be properly closed; otherwise, the lexer will reach the end of the file without finding the closure.

Examples

/* This is a comment

printf("Hello, World!"); // Error: Missing '*/'

Detecting and Handling Lexical Errors

The lexical analyzer (lexer) detects errors using Finite Automata and follows these strategies:

- Error Reporting: The compiler highlights the position of the error.

- Error Recovery: The lexer may attempt to correct minor mistakes (e.g., replacing invalid characters).

- Skipping Unrecognized Tokens: The lexer may ignore invalid input and continue processing.

Example: Lexical Error Messages

int 1number = 10;

System.out.println("Hello World);

The compiler output may be:

Error: Invalid identifier '1number' (line 1, column 5)

Error: Unterminated string literal (line 2, column 27)

Real-World Applications

- Compilers (GCC, Clang, Javac): Detect lexical errors during preprocessing.

- IDEs (VS Code, IntelliJ): Provide real-time lexical error highlighting.

- Syntax Highlighters: Detect unclosed strings and invalid tokens.

- Static Code Analysis Tools (ESLint, PyLint): Identify invalid identifiers and symbols.

Summary of Lexical Errors

| Lexical Error Type | Description | Example |

|---|---|---|

| Illegal Characters | Characters not in the language. | int x = 10 @ 5; (Error: '@') |

| Invalid Identifiers | Incorrect variable names. | int 1var = 5; (Error: Cannot start with a digit) |

| Unclosed Strings | Missing string delimiters. | print("Hello; (Error: Unterminated string) |

| Invalid Numbers | Incorrect numeric formats. | float x = 3..14; (Error: Double dots) |

| Unterminated Comments | Comment block not closed. | /* This is a comment (Error: Missing '*/') |

Lexical Error Recovery Techniques

Lexical error recovery ensures that minor errors in the source code do not halt the compilation process completely. Instead of stopping execution on encountering an invalid token, modern compilers attempt to recover and continue processing the remaining code. This improves robustness and provides useful feedback to the programmer.

Types of Lexical Errors

Lexical errors occur when a sequence of characters does not match any valid token pattern defined by the language. Common lexical errors include:

- Illegal Characters: Characters not allowed in the language (e.g., `@` in C).

- Invalid Identifiers: Variable names starting with a digit (e.g., `1var`).

- Unclosed String Literals: Missing closing quotation marks (`"Hello`).

- Invalid Numeric Formats: Floating-point errors like `5..5`.

- Unknown Symbols: Special characters used incorrectly (`$value` in Java).

Panic Mode Recovery

The simplest and most commonly used method where the lexer discards characters until a valid token is found.

Example

int x = 5 @ 10;

In this case, `@` is an illegal character in C.

- Lexer Action: Skip `@` and continue scanning.

- Recovered Output: The lexer recognizes `<int> <x> = <5> <10>;` but reports an error at `@`.

Use Case

- Ideal for unrecognized symbols in the middle of a statement.

- Prevents the compiler from halting on a minor error.

Error Token Insertion

The lexer replaces an invalid token with a placeholder (e.g., `<TOKEN_ERROR>`) and continues scanning.

Example

1var = 10In Python, identifiers cannot start with a digit.

- Lexer Action: Replace `1var` with `<TOKEN_ERROR>` but allow further parsing.

- Recovered Output: `<TOKEN_ERROR> = 10`

Use Case

- Useful when handling minor lexing mistakes.

- Ensures that parsing can continue while marking the erroneous token.

Symbol Table Correction

For minor lexical mistakes, the lexer may suggest corrections based on the symbol table.

Example

whil (x < 10) {

System.out.println(x);

}

The keyword `whil` is misspelled, but the lexer detects it as a probable `while`.

- Lexer Action: Suggest `<while>` instead of `whil`.

- Recovered Output: `while (x < 10) { System.out.println(x); }`

Use Case

- Helps in typo detection for keywords and identifiers.

- Common in IDEs and modern compilers with autocorrect features.

Lookahead Correction

The lexer looks ahead to check if an error can be automatically corrected.

Example

int x = 5.5.3;

This is invalid because `5.5.3` is not a valid floating-point number.

- Lexer Action: Identify `5.5` as a valid floating-point number and `.3` as a separate token.

- Recovered Output: `int x = 5.5; .3;` (which may cause further errors in parsing but avoids lexical failure).

Use Case

- Effective for handling token boundary confusion.

- Ensures tokens are correctly split instead of marking the whole sequence invalid.

Logging & Reporting

Modern compilers maintain error logs instead of immediately stopping execution on lexical errors.

Example

In VS Code or IntelliJ, an incomplete statement like:

console.log("Hello;

Shows a warning: `Unclosed string literal` but allows further typing.

Use Case

- Helps developers debug efficiently.

- Widely used in modern IDEs and error-tracking systems.

Real-World Applications

- Compilers (GCC, Clang): Implement panic mode recovery to skip unknown symbols.

- IDEs (VS Code, IntelliJ): Use symbol table correction and logging to highlight errors.

- Interpreters (Python, JavaScript Engines): Apply lookahead correction to split ambiguous tokens.

- Static Analysis Tools (ESLint, PyLint): Use error token insertion to continue checking code.

Summary

| Recovery Technique | Action Taken | Example |

|---|---|---|

| Panic Mode Recovery | Skip invalid tokens until a valid token is found. | `int x = 5 @ 10;` → Skips `@`. |

| Error Token Insertion | Replace invalid tokens with `<TOKEN_ERROR>`. | `1var = 10` → `<TOKEN_ERROR> = 10`. |

| Symbol Table Correction | Suggests possible corrections for mistyped keywords. | `whil (x < 10)` → Suggests `while`. |

| Lookahead Correction | Splits ambiguous tokens correctly. | `int x = 5.5.3;` → Splits into `5.5` and `.3`. |

| Logging & Reporting | Logs lexical errors without stopping execution. | VS Code highlights `console.log("Hello;` as an error. |

Real-World Applications

Lexical Analysis is widely used beyond compiler design. Many real-world systems and software applications depend on efficient tokenization, pattern recognition, and lexical scanning to process and understand textual data. Below are some key areas where lexical analysis plays a crucial role.

Code Syntax Highlighting

Lexical analysis is used in Integrated Development Environments (IDEs) and text editors to recognize different programming constructs and apply appropriate syntax highlighting.

How it works:

- The lexer scans the source code and classifies tokens into categories such as keywords, identifiers, literals, and operators.

- Each token is assigned a color or style based on predefined rules.

- The syntax highlighter updates dynamically as the user types.

Examples:

- Visual Studio Code: Uses TextMate grammars to recognize token patterns.

- PyCharm: Implements lexical analysis for real-time syntax validation.

- Sublime Text: Uses regex-based lexers to highlight syntax.

Example:

Consider this Python snippet in an IDE:

def greet(name):

print("Hello, " + name + "!")

The lexer categorizes and highlights:

def(Keyword - Blue)greet(Function Name - White)name(Parameter - White)print(Built-in Function - Green)"Hello, "(String Literal - Yellow)

Keyword Extraction in Search Engines

Search engines such as Google and Bing use lexical analysis to extract meaningful words from user queries and match them against indexed web pages.

How it works:

- Lexical analysis splits the user’s query into tokens (words, numbers, special characters).

- Stop words (e.g., "the", "a", "of") are removed to focus on key terms.

- Lexical scanning applies stemming and lemmatization (e.g., "running" → "run").

- The parsed tokens are compared with indexed content for search ranking.

Examples:

- Google Search: Uses tokenization and stemming to optimize search results.

- Bing Search: Applies lexical analysis to analyze user intent.

- DuckDuckGo: Uses lexical parsing to avoid redundant processing.

Example:

Consider a user searching for:

"Best places to visit in Paris during winter"

The lexer processes it as:

- Keywords: "best", "places", "visit", "Paris", "winter"

- Stop Words Removed: "to", "in", "during"

- Indexed Matching: Results are ranked based on keyword relevance.

Command-Line Interpreters

Lexical analyzers are essential in command-line interpreters like Bash, PowerShell, and Zsh to process user commands efficiently.

How it works:

- The lexer reads the user input character by character.

- The command is broken into tokens (command, options, arguments).

- The parsed tokens are sent to the shell for execution.

Examples:

- Bash Shell: Tokenizes input like

ls -l /home. - PowerShell: Recognizes commands like

Get-Process | Sort-Object CPU. - Zsh: Uses lexical analysis for auto-completion and syntax validation.

Example:

Consider this Linux command:

grep "error" logfile.txt | sort | uniq -c

The lexer tokenizes the input as:

grep(Command - searches for a pattern in files)"error"(String Literal - search term)logfile.txt(Filename - argument)|(Pipe Operator - passes output to next command)sort(Command - sorts lines)uniq -c(Command - removes duplicates and counts occurrences)

Additional Real-World Applications

- Text Editors (MS Word, Google Docs): Use lexical analysis for spell checking and grammar correction.

- Natural Language Processing (NLP): Chatbots and AI assistants like Siri, Alexa, and Google Assistant tokenize spoken words for interpretation.

- Data Processing Tools: Big Data frameworks like Apache Spark and Hadoop tokenize unstructured data before analysis.

- Log Analysis Tools: Security software like Splunk parses logs using lexical scanners to detect anomalies.

Common Pitfalls & Debugging

Lexical analysis, while crucial for efficient compilation, has several challenges that can cause incorrect tokenization, performance issues, or incompatibility with modern programming needs. Below are common pitfalls in lexical analysis and their debugging strategies.

Handling Whitespace & Comments Efficiently

Whitespace and comments do not contribute to program execution but must be handled carefully during lexical analysis to avoid inefficiencies.

Problems Caused by Whitespace & Comments

- Unnecessary processing increases compilation time.

- Multi-line comments may cause unintentional nesting errors.

- Inconsistent tab and space handling can alter code behavior in whitespace-sensitive languages (e.g., Python).

Strategies for Efficient Handling

- Ignore Redundant Whitespace: The lexer should discard unnecessary spaces except inside string literals.

- Efficient Comment Removal: Single-line (

//) and multi-line (/*...*/) comments should be skipped early. - Lookahead for Block Comments: Ensure multi-line comments properly close before proceeding.

- Optimize Buffering: Use buffered reading to handle large files efficiently.

Example Issue & Debugging

Consider this JavaScript code:

let x = 10; // This is a comment

console.log("Value: " + x);

If the lexer fails to remove comments properly, the comment may interfere with parsing. A debugging strategy involves:

- Checking if the comment tokenization stops at `\n` for single-line comments.

- Ensuring `/*` is closed by `*/` in multi-line comments.

Resolving Ambiguous Tokens

Lexers must correctly distinguish between tokens that share prefixes (e.g., <= vs. < =).

1. Why Ambiguity Occurs

- Some operators overlap (e.g.,

=vs.==). - Spaces may or may not separate tokens.

- Lexical lookahead is required to decide token boundaries.

Example of Token Ambiguity

Consider this C++ expression:

if (x <= y)

if (x < = y)

The first line correctly recognizes <= as a single token, but the second line has ambiguity:

- Should it be interpreted as `<` and `=` separately?

- Or should the lexer expect a space-separated `=`?

Debugging Strategy

- Use greedy matching (prefer longest token first).

- Implement multi-character lookahead.

- Maintain a token buffer to store partially recognized sequences.

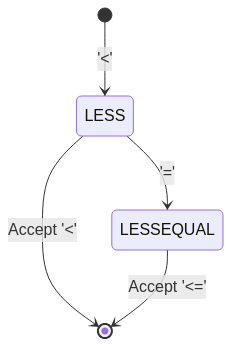

Solution in a DFA

To resolve `<=` vs. `<=`, the DFA transitions should be structured as:

stateDiagram

[*] --> LESS : '<'

LESS --> LESSEQUAL : '='

LESS --> [*] : Accept '<'

LESSEQUAL --> [*] : Accept '<='

Dealing with Unicode & Multi-Language Lexing

Modern compilers need to support Unicode characters, which introduces complexity in tokenization.

Challenges in Unicode Handling

- Identifiers in non-English languages: Programming languages like Python allow Unicode variable names.

- Byte Order Mark (BOM) Issues: Some UTF-encoded files may start with a BOM, which needs to be ignored.

- Multi-byte Characters: UTF-8 characters can span multiple bytes, requiring careful handling.

Examples of Unicode in Identifiers

Valid variable names in Python:

变量 = 100 # Chinese variable name

π = 3.14 # Greek letter as identifier

Debugging Strategies

- Use Unicode-aware tokenization: Extend identifier regex to include Unicode ranges.

- Normalize Unicode input: Convert all input to a standard form (e.g., NFC normalization).

- Handle UTF-8 correctly: Ensure lexers read multi-byte sequences properly.

Example Regex for Unicode Identifiers

[\p{L}_][\p{L}\p{N}_]*

This allows letters from any language in identifiers.

Real-World Applications

- Python: Supports Unicode variable names.

- JavaScript: Uses UTF-16 internally.

- Modern Editors (VS Code): Syntax highlighters recognize Unicode tokens.

Advanced Optimization for Industry Use

Optimizing lexical analysis is essential in industry applications where performance, memory efficiency, and speed are critical. Techniques such as DFA minimization and Just-In-Time (JIT) lexing help reduce overhead and improve real-time execution in modern compilers and interpreters.

DFA Minimization for Fast Scanning

Deterministic Finite Automaton (DFA) minimization is an optimization technique that reduces the number of states in a lexical analyzer, making token recognition faster and more efficient.

Why DFA Minimization Matters

- Large DFAs can cause high memory usage and slow execution.

- Minimization reduces redundant states, making scanning faster.

- Fewer states mean fewer computations per character.

Steps in DFA Minimization

The Hopcroft's Algorithm is widely used for minimizing DFAs:

- Step 1: Partitioning States

- Group states into accepting and non-accepting partitions.

- Step 2: Splitting Equivalent States

- States that behave identically (same transitions) are merged.

- Step 3: Constructing the Minimal DFA

- Eliminate unnecessary transitions.

- Ensure each state is reachable.

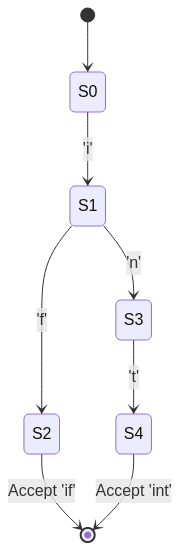

Example: Minimizing a DFA

Consider a DFA that recognizes the keywords "if" and "int".

Before Minimization:

stateDiagram

[*] --> S0

S0 --> S1 : 'i'

S1 --> S2 : 'f'

S1 --> S3 : 'n'

S3 --> S4 : 't'

S2 --> [*] : Accept 'if'

S4 --> [*] : Accept 'int'

After Minimization:

stateDiagram

[*] --> S0

S0 --> S1 : 'i'

S1 --> S2 : 'f' (if) / 'n' (int)

S2 --> [*] : Accept (if/int)

By merging states S3 and S4 into S2, we reduce the number of transitions, improving performance.

Real-World Applications

- GCC & Clang Compilers: Optimize lexical analysis using minimized DFAs.

- Regex Engines: Minimize finite automata for faster pattern matching.

- Security Tools: Use DFA minimization for efficient malware detection.

Implementing Just-In-Time (JIT) Lexing for Performance

Just-In-Time (JIT) lexing is an on-demand tokenization technique where lexing occurs only when required, rather than preprocessing the entire source code in advance.

Why JIT Lexing is Useful

- Reduces preprocessing time by analyzing only executed code paths.

- Improves memory efficiency by avoiding unnecessary token storage.

- Essential for dynamic and interpreted languages.

How JIT Lexing Works

- Traditional Lexer: Scans and tokenizes the entire source file before parsing.

- JIT Lexer: Tokenizes only the portion being executed.

Example: Traditional vs. JIT Lexing

Traditional Lexing (Static Compilation):

int main() {

int x = 10;

if (x > 5) {

printf("Hello");

}

}

The traditional lexer scans all tokens before parsing begins.

JIT Lexing (Dynamic Interpretation):

if condition():

execute()

JIT lexing only tokenizes the if-statement when `condition()` is called.

Real-World Implementations

- JavaScript V8 Engine (Google Chrome): Uses JIT lexing for optimized script execution.

- Just-in-Time Compilers (JITs): Dynamically analyze source code.

- Lua Interpreters: Perform JIT lexing for efficiency.